| author | title | date |
|---|---|---|
| Security and Privacy in Data Science (CS 763) | Course Welcome | September 04, 2019 |

Security and Privacy

It's everywhere!

Stuff is totally insecure!

It's really difficult!

What topics to cover?

A really, really vast field

- Things we will not be able to cover:

    - Real-world attacks

    - Computer systems security

    - Defenses and countermeasures

    - Social aspects of security

    - Theoretical cryptography

    - ...

Theme 1: Formalizing S&P

- Mathematically formalize notions of security

- Rigorously prove security

- Guarantee that certain breakages can't occur

Remember: definitions are tricky things!

Theme 2: Automating S&P

- Use computers to help build more secure systems

- Automatically check security properties

- Search for attacks and vulnerabilities

Five modules

- Differential privacy

- Adversarial machine learning

- Cryptography in machine learning

- Algorithmic fairness

- PL and verification

This course is broad!

- Each module could be its own course

- We won't be able to go super deep

- You will probably get lost

- Our goal: broad survey of multiple areas

- Lightning tour, focus on high points

Hope: find a few things that interest you

This course is technical!

- Approach each topic from a rigorous point of view

- Parts of "data science" with provable guarantees

- This is not a "theory course", but...


Differential privacy

A mathematically solid definition of privacy

- Simple and clean formal property

- Satisfied by many algorithms

- Degrades gracefully under composition

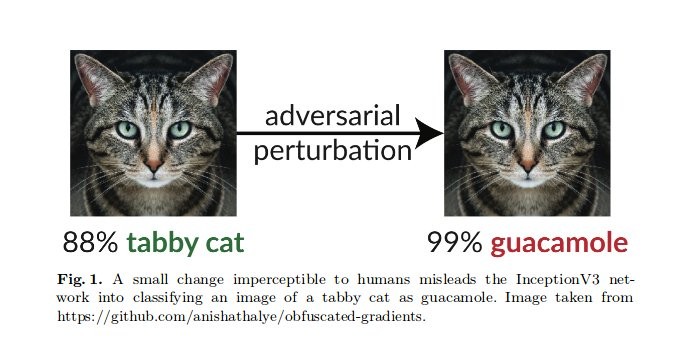

Adversarial machine learning

Manipulating ML systems

- Crafting examples to fool ML systems

- Messing with training data

- Extracting training information

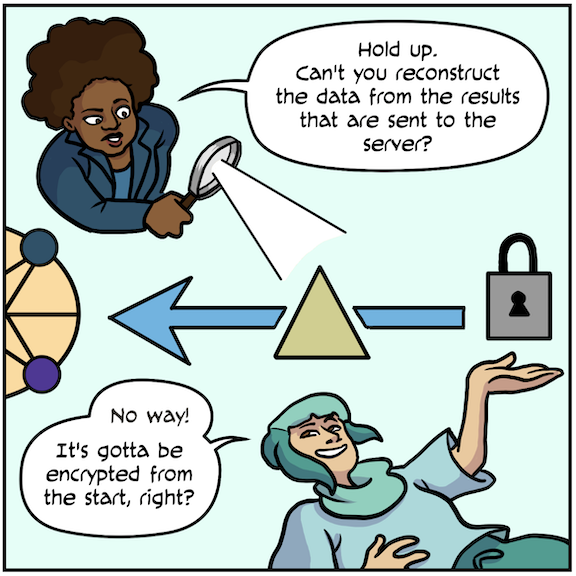

Cryptography in machine learning

Crypto in data science

- Learning models without raw access to private data

- Collecting analytics data privately, at scale

- Side channels and implementation issues

- Verifiable execution of ML models

- Other topics (e.g., model watermarking)

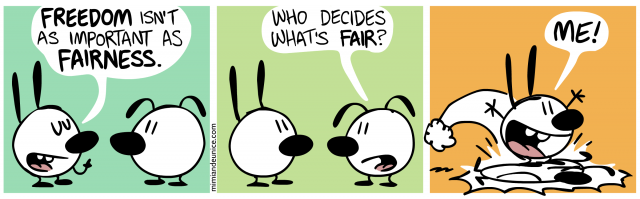

Algorithmic fairness

When is a program "fair"?

- Individual and group fairness

- Inherent tradeoffs and challenges

- Fairness in unsupervised learning

- Fairness and causal inference

PL and verification

Proving correctness

- Programming languages for security and privacy

- Interpreting neural networks and ML models

- Verifying properties of neural networks

- Verifying probabilistic programs

Tedious course details

Lecture schedule

- First ten weeks: lectures MWF

    - Intensive lectures to get you up to speed

    - M: I will present

    - WF: You will present

- Last five weeks: no lectures

    - Intensive work on projects

    - I will be available to meet one-on-one

You must attend lectures and participate

Class format

- Three components:

    - Paper presentations

    - Presentation summaries

    - Final project

- Announcements, schedule, and materials: on the website

- Class mailing list: compsci763-1-f19@lists.wisc.edu

Paper presentations

- In pairs, lead a discussion on a group of papers

- See website for detailed instructions

- See website for schedule of topics

- One week before presentation: meet with me

    - Come prepared with presentation materials

    - Run through your outline, and I will give feedback

Presentation summaries

- In pairs, prepare a written summary of another group's presentation

- See website for detailed instructions

- See website for schedule of topics

- One week after the presentation: send me your summary

    - I will work with you to polish the report

    - Writeups will be shared with the class

Final project

- In groups of three (or very rarely two)

- See website for project details

- Key dates:

    - October 11: Milestone 1

    - November 8: Milestone 2

    - End of class: final writeups and presentations

Todos for you

- Complete the course survey

- Explore the course website

- Think about which lecture you want to present

- Think about which lecture you want to summarize

- Form project groups and brainstorm topics

Sign up for slots and projects here

We will move quickly

- First deadline: next Monday, September 9

    - Form paper and project groups

    - Sign-up sheet here

    - Please don't sign up for the same slot

- First slot is soon: next Friday, September 13

    - Only slot for presenting differential privacy

    - I will help the first group prepare

Defining privacy

What does privacy mean?

- Many kinds of "privacy breaches"

    - Obvious: a third party learns your private data

    - Retention: you give data, and a company keeps it forever

    - Passive: you don't know your data is being collected

Why is privacy hard?

- Hard to pin down what privacy means!

- Once data is out, can't put it back into the bottle

- A data release that preserves privacy today may violate privacy tomorrow when combined with "side information"

- Data may be used many times, and it often doesn't change

Hiding private data

- Delete "personally identifiable information"

    - Name and age

    - Birthday

    - Social security number

    - ...

- Publish the "anonymized" or "sanitized" data

Problem: not enough

- Can match up anonymized data with public sources

- De-anonymize data, associate names to records

- Really, really hard to think about side information

- May not even be public at time of data release!
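Deleting identifying fields and then re-joining on what remains can be sketched as a toy linkage attack. Everything here is hypothetical: the records, the field names (`zip`, `sex`, `occupation`, `diagnosis`), and the idea that the public table is something like a staff directory. The point is only that fields left in the "anonymized" release can act as a join key against a public source that still carries names.

```python
# Toy linkage attack. All data and field names are hypothetical.

PII_FIELDS = ("name", "age", "birthday", "ssn")  # fields deleted before release
LINK_FIELDS = ("zip", "sex", "occupation")       # fields left in the release

def anonymize(records, pii=PII_FIELDS):
    """Drop personally identifiable fields from each record."""
    return [{k: v for k, v in r.items() if k not in pii} for r in records]

def link(released, public, keys=LINK_FIELDS):
    """Re-identify released records whose remaining fields match exactly one public entry."""
    index = {}
    for person in public:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = []
    for record in released:
        names = index.get(tuple(record[k] for k in keys), [])
        if len(names) == 1:  # unique match: the record has a name again
            matches.append((names[0], record["diagnosis"]))
    return matches

hospital = [
    {"name": "Alice", "age": 39, "ssn": "000-00-0001",
     "zip": "53703", "sex": "F", "occupation": "pilot", "diagnosis": "asthma"},
    {"name": "Bob", "age": 44, "ssn": "000-00-0002",
     "zip": "53706", "sex": "M", "occupation": "teacher", "diagnosis": "diabetes"},
]
public_roster = [  # hypothetical public source where names are visible
    {"name": "Alice", "zip": "53703", "sex": "F", "occupation": "pilot"},
    {"name": "Bob", "zip": "53706", "sex": "M", "occupation": "teacher"},
    {"name": "Carol", "zip": "53711", "sex": "F", "occupation": "nurse"},
]

released = anonymize(hospital)
print(link(released, public_roster))
# [('Alice', 'asthma'), ('Bob', 'diabetes')]
```

Requiring a unique match is just one simple choice for the sketch; even a match against a few candidates narrows down who a record belongs to.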

Netflix challenge

- Database of movie ratings

- Published: ID number, movie rating, and rating date

- Attack: from public IMDB ratings, recover names for Netflix data
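A heavily simplified version of this kind of matching can be sketched by scoring each anonymous ID by how many of a person's public ratings it reproduces, while allowing the dates to differ slightly. The data, the 14-day tolerance, and the minimum score of 2 are all hypothetical. This scoring rule is only an illustration of approximate matching on sparse data, not the method used in the actual attack.

```python
from datetime import date

# Hypothetical released data: anonymous ID -> [(movie, rating, date)]
netflix = {
    101: [("Movie A", 5, date(2005, 3, 1)), ("Movie B", 2, date(2005, 3, 9)),
          ("Movie C", 4, date(2005, 4, 2))],
    102: [("Movie A", 3, date(2005, 1, 5)), ("Movie D", 5, date(2005, 2, 7))],
}
# Hypothetical public ratings posted under a real name: name -> [(movie, rating, date)]
imdb = {
    "Alice": [("Movie A", 5, date(2005, 3, 3)), ("Movie C", 4, date(2005, 4, 1))],
}

def score(public_ratings, anon_ratings, tolerance_days=14):
    """Count public ratings that appear in anon_ratings with the same rating and a nearby date."""
    hits = 0
    for movie, rating, day in public_ratings:
        if any(m == movie and r == rating and abs((d - day).days) <= tolerance_days
               for m, r, d in anon_ratings):
            hits += 1
    return hits

def best_match(public_ratings, released, min_score=2):
    """Return the highest-scoring anonymous ID, or None if no ID reaches min_score."""
    scores = {anon_id: score(public_ratings, ratings) for anon_id, ratings in released.items()}
    anon_id = max(scores, key=scores.get)
    return anon_id if scores[anon_id] >= min_score else None

for name, ratings in imdb.items():
    print(name, "->", best_match(ratings, netflix))
# Alice -> 101
```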

"Blending in a crowd"

- Only release records that are similar to others

    - k-anonymity: each released record must be identical to at least k-1 others on its quasi-identifiers (attributes such as zip code or age)

    - Other variants: l-diversity, t-closeness, ...
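A check for k-anonymity can be sketched by grouping records on their quasi-identifiers and requiring every group to have at least k members. The column names and generalized values such as `537**` below are hypothetical.

```python
from collections import Counter

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs in at least k records."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(size >= k for size in groups.values())

# Hypothetical release with zip codes truncated and ages bucketed.
release = [
    {"zip": "537**", "age": "30-39", "diagnosis": "flu"},
    {"zip": "537**", "age": "30-39", "diagnosis": "asthma"},
    {"zip": "537**", "age": "40-49", "diagnosis": "flu"},
    {"zip": "537**", "age": "40-49", "diagnosis": "diabetes"},
]

print(is_k_anonymous(release, ["zip", "age"], k=2))  # True
print(is_k_anonymous(release, ["zip", "age"], k=3))  # False
```

The check constrains only the quasi-identifiers and says nothing about the sensitive column. If every record in a group shared the same diagnosis, the release would still be k-anonymous yet reveal that diagnosis, which is the gap variants such as l-diversity target.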

Problem: composition

- Repeating k-anonymous releases may lose privacy

- Privacy protection may fall off a cliff

- First few queries fine, then suddenly total violation

- Again, interacts poorly with side-information
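One way repeated releases can fail is an intersection attack. The sketch below rests on hypothetical assumptions. Two hospitals that both treated Alice each publish a 2-anonymous release with different generalization rules. The attacker knows Alice's zip code and age and assumes her diagnosis is the same in both releases. Each release alone leaves two candidate diagnoses, but intersecting them leaves only one. All data, rules, and names are made up for illustration.

```python
from collections import Counter

# Hypothetical rule for hospital 1: truncate zip to four digits, bucket age by decade.
def generalize_1(zip_code, age):
    low = age // 10 * 10
    return (zip_code[:4] + "*", f"{low}-{low + 9}")

# Hypothetical rule for hospital 2: keep full zip, report only whether age is under 40.
def generalize_2(zip_code, age):
    return (zip_code, "<40" if age < 40 else "40+")

release_1 = [  # (generalized zip, generalized age, diagnosis)
    ("5370*", "30-39", "asthma"), ("5370*", "30-39", "flu"),
    ("5371*", "30-39", "diabetes"), ("5371*", "30-39", "flu"),
]
release_2 = [
    ("53703", "<40", "asthma"), ("53703", "<40", "diabetes"),
    ("53711", "40+", "flu"), ("53711", "40+", "asthma"),
]

def min_group_size(release):
    """Size of the smallest equivalence class (the release is k-anonymous up to this k)."""
    return min(Counter((z, a) for z, a, _ in release).values())

def candidates(release, generalize, zip_code, age):
    """Diagnoses in the equivalence class that the target falls into."""
    key = generalize(zip_code, age)
    return {dx for z, a, dx in release if (z, a) == key}

alice_zip, alice_age = "53703", 34
c1 = candidates(release_1, generalize_1, alice_zip, alice_age)
c2 = candidates(release_2, generalize_2, alice_zip, alice_age)
print(min_group_size(release_1), min_group_size(release_2))  # 2 2
print(sorted(c1), sorted(c2))  # ['asthma', 'flu'] ['asthma', 'diabetes']
print(c1 & c2)  # {'asthma'}
```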

Differential privacy

- Proposed by Dwork, McSherry, Nissim, Smith (2006)

A new approach to formulating privacy goals: the risk to one’s privacy, or in general, any type of risk... should not substantially increase as a result of participating in a statistical database. This is captured by differential privacy.

Basic setting

- Private data: set of records from individuals

    - Each individual: one record

    - Example: set of medical records

- Private query: function from database to output

    - Randomized: adds noise to protect privacy
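The setting above can be sketched in code. A database is a list of records, one per individual, and a private query is a randomized function of that database. For the noise, the sketch uses the Laplace mechanism with scale 1/epsilon. This is a standard choice for counting queries that the slides do not name, and it is known to satisfy the definition that follows with delta = 0. The record fields and function names are hypothetical.

```python
import random

def neighbors(db1, db2):
    """True if the databases have the same size and differ in exactly one individual's record."""
    return len(db1) == len(db2) and sum(a != b for a, b in zip(db1, db2)) == 1

def noisy_count(db, predicate, epsilon, rng=random):
    """Randomized counting query: the true count plus Laplace noise with scale 1/epsilon."""
    true_count = sum(1 for record in db if predicate(record))
    # The difference of two independent Exponential(rate=epsilon) draws is Laplace(0, 1/epsilon).
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise

# Hypothetical medical records, one per individual.
db = [{"diabetic": True}, {"diabetic": False}, {"diabetic": True}]
db_prime = [{"diabetic": False}, {"diabetic": False}, {"diabetic": True}]  # first record changed

print(neighbors(db, db_prime))  # True
rng = random.Random(0)
print(noisy_count(db, lambda r: r["diabetic"], epsilon=0.5, rng=rng))  # true count 2, plus noise
```

Changing one record moves the true count by at most 1, so noise of scale 1/epsilon is enough for this particular query. Queries whose answers can shift further when one record changes need proportionally more noise.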

Basic definition

A query $Q$ is $(\varepsilon, \delta)$-differentially private if for every two databases $db, db'$ that differ in one individual's record, and for every subset $S$ of outputs, we have:

$$\Pr[ Q(db) \in S ] \leq e^\varepsilon \cdot \Pr[ Q(db') \in S ] + \delta$$
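For a mechanism with only finitely many outputs, the definition can be checked directly by enumerating every subset S. The sketch below does this for randomized response, a classic mechanism that is not covered on this slide, applied to a database holding a single one-bit record. The two neighboring databases are therefore the bits 0 and 1. The function names are hypothetical, and enumerating subsets is only practical for tiny output spaces.

```python
import math
from itertools import chain, combinations

def randomized_response(p_truth):
    """Mechanism on a one-bit database b: report b with probability p_truth, else report 1 - b."""
    return lambda b: {b: p_truth, 1 - b: 1 - p_truth}

def subsets(outputs):
    """All subsets S of the finite output space."""
    return chain.from_iterable(combinations(outputs, r) for r in range(len(outputs) + 1))

def satisfies_dp(mechanism, db, db_prime, outputs, epsilon, delta, tol=1e-12):
    """Check Pr[Q(db) in S] <= e^epsilon * Pr[Q(db') in S] + delta for all S, in both directions."""
    p, q = mechanism(db), mechanism(db_prime)
    for S in subsets(outputs):
        p_S = sum(p.get(o, 0.0) for o in S)
        q_S = sum(q.get(o, 0.0) for o in S)
        # tol absorbs floating-point rounding when the bound holds with equality
        if p_S > math.exp(epsilon) * q_S + delta + tol:
            return False
        if q_S > math.exp(epsilon) * p_S + delta + tol:
            return False
    return True

eps = 1.0
calibrated = randomized_response(math.exp(eps) / (1 + math.exp(eps)))
too_honest = randomized_response(0.9)

print(satisfies_dp(calibrated, 0, 1, [0, 1], eps, delta=0.0))  # True
print(satisfies_dp(too_honest, 0, 1, [0, 1], eps, delta=0.0))  # False
print(satisfies_dp(too_honest, 0, 1, [0, 1], eps, delta=0.7))  # True
```

The last line shows the role of delta. A large enough additive slack admits a mechanism that the multiplicative bound alone rejects, which is why delta is usually chosen to be very small.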